My Workflow for Agentic Coding

I’ve been experimenting with agentic coding for a while, and I’ve developed a set of techniques – and a bit of intuition – for working with agents to deliver actual value without sacrificing my soul as a software engineer. Here’s one of them.

The process I follow consists of four steps:

- Talk

- ADR

- Plan

- Execute

We’ll discuss each of them in detail. For simplicity, let’s assume I’m adding a new feature to an existing system. I use Cursor as my agentic IDE, so its system prompt may influence the process.

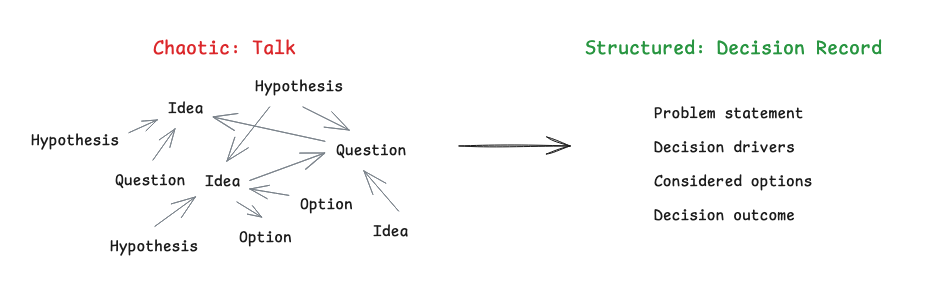

Talk

I start the session by talking to the agent. I describe

- what the problem is,

- what the desired outcome is,

- my hypotheses about what we need to do to achieve the final functionality.

Then I ask the model to check those hypotheses by reading the existing files and building a sense of how they relate to each other. If the scope is known and small, I explicitly limit the agent to a specific working directory. Otherwise, I intentionally make it discover the structure, giving only a few keywords as a hint.

Next, I talk through potential solutions, the same way I would with a fellow engineer, but at a high level. It helps me organise my thoughts and enriches the context further. After a while, I ask the agent for feedback. Then I propose another solution.

The agent often continues my reasoning by writing example code. When I catch something tricky, e.g. potential performance issues, crossed domain boundaries or simple misalignment with the original problem, I point it out and ask for clarification or a change.

The talk lasts until I run out of ideas – or time. By now, I have a pretty solid understanding of both the problem space and the potential solution space. But to gain the actual benefit of working with an agent, I ask about one last thing: the options I might have missed that would fit as well. I often say something like “think out of the box” to let the agent take a different perspective.

The agent prepares a list of alternative approaches. Some of them are totally abstract, but others are worth considering. So I keep talking: I challenge a selected approach and learn something new.

This session lasts from 15 minutes for small features to an hour when working on architecture or infrastructure code. Cooperating with the agent not only gives me another perspective but also points out other places potentially affected by the changes I want to introduce.

This talk is rich in useful information: the problem, the requirements, and a few potential solutions analysed and verified in the app-specific context. I don’t want to lose it, because it may be relevant for future changes as well, or serve as solid initial context for another discussion. So I ask the agent to create an ADR from the session.

ADR - Architecture Decision Record

If you don’t know what an ADR is: it’s a Markdown file sitting in the project repository that records a significant architectural decision¹. These are the kinds of decisions that usually become difficult to change as the project grows. For example:

- Should we use RabbitMQ or Kafka?

- Classic entity-powered CRUD or Event Sourcing?

Or smaller, domain-specific decisions like

- how to store calculations?

- how to model a specific process?

- refactor to X, or just leave it as is?

A typical ADR consists of:

- Problem and context

- Decision Drivers

- Considered options, with pros and cons of each

- Decision Outcome

After the previous step, the agent has most of the information it needs to write a decent ADR. I try to maintain a consistent decision log, so I ask the agent to use the template and fill it in with the relevant information.
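The following helps make “a consistent decision log” concrete. It is a minimal Python sketch that creates the next numbered ADR file containing the four sections listed above. Several details are assumptions borrowed from common ADR conventions rather than a specific template: the `NNNN-title.md` naming scheme, the `Date`/`Status` lines, and the helper names.

```python
import re
from datetime import date
from pathlib import Path

# Section headings from the "typical ADR" list; wording and order are assumptions.
SECTIONS = [
    "Problem and context",
    "Decision Drivers",
    "Considered options",
    "Decision Outcome",
]


def slugify(title: str) -> str:
    """Lowercase the title and collapse non-alphanumeric runs into single dashes."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def next_adr_number(adr_dir: Path) -> int:
    """Return one more than the highest NNNN- prefix among existing ADR files."""
    highest = 0
    for path in adr_dir.glob("*.md"):
        match = re.match(r"(\d{4})-", path.name)
        if match:
            highest = max(highest, int(match.group(1)))
    return highest + 1


def render_adr(number: int, title: str, status: str = "Proposed") -> str:
    """Build an empty ADR body; the agent (or a human) fills in each section."""
    lines = [
        f"# {number:04d}. {title}",
        "",
        f"Date: {date.today().isoformat()}",
        f"Status: {status}",
        "",
    ]
    for section in SECTIONS:
        lines += [f"## {section}", "", "TODO", ""]
    return "\n".join(lines)


def create_adr(adr_dir: Path, title: str) -> Path:
    """Write the next numbered ADR file into adr_dir and return its path."""
    adr_dir.mkdir(parents=True, exist_ok=True)
    number = next_adr_number(adr_dir)
    path = adr_dir / f"{number:04d}-{slugify(title)}.md"
    path.write_text(render_adr(number, title), encoding="utf-8")
    return path
```

In an empty, hypothetical `docs/adr` directory, `create_adr(Path("docs/adr"), "Store calculations as events")` would write `docs/adr/0001-store-calculations-as-events.md`. Numbering the files keeps the log ordered, so older decisions stay easy to find and reference.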

I read the decision record and, to be honest, prune most of it. The agent captures the context and problem statement correctly, but some of the considered options and their pros and cons need cleanup. So I remove irrelevant bullet points, example code and other noise. I leave the core: the options, the best and worst aspects of each, and a brief example if it’s relevant to the final decision.

I make the final decision, add a bit of justification, and ask the agent to update the ADR. We’re ready for the next step.

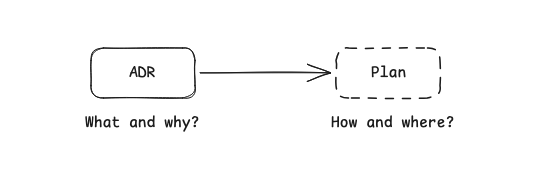

Plan

To avoid distracting the agent, I start a new conversation and attach the newly created ADR. Some models, like Sonnet or Opus 4.5, have a smaller context window. Starting fresh leaves them more room and avoids auto-summarisation.

I switch to Plan mode and ask the agent to create a detailed plan for implementing the feature described in the attached document. Sometimes I add details about tests or point out a specific directory, but I like to let the agent figure it out.

The ADR gives the agent specific instructions about the desired state and what it should avoid. At this stage, the plan it proposes should not surprise me, because it should follow the decision.

The plan usually lists specific files to create or modify, a more or less detailed description of the changes, or even ready-to-use code. Compared to the ADR, the plan is more sensitive to change: it refers to specific files, locations, lines, etc. For me, the plan is ephemeral. If I decide to postpone the change, I remove the plan but keep the ADR. The ADR is about what and why; the plan is about how and where.

Yes, I read the plan. At this stage, I want to be at least 90% sure that the agent will do what I want; it’s hard to be 100% sure when working with a non-deterministic system. I discuss the plan with the agent, ask for clarification, or point out where it should do things differently.

Last but not least, I try to close the loop by making sure the agent knows how to test its own changes. Sometimes I even let it commit, but I prefer to verify the outcome manually first.

From time to time, I use a Cursor feature to spin up two agents in separate worktrees and see which one proposes a better plan. I just pick the better one and continue as usual.
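Cursor sets these worktrees up for you. For anyone who wants to reproduce the idea by hand, here is a rough Python sketch that assumes plain `git worktree`. It builds one `git worktree add -b <branch> <path>` command per competing agent and runs the commands only when `dry_run` is turned off. The branch and directory naming (`<feature>-plan-N`, placed next to the repository) and the function names are hypothetical.

```python
import subprocess
from pathlib import Path


def worktree_commands(repo: Path, feature: str, variants: int = 2) -> list[list[str]]:
    """Build one `git worktree add` command per competing agent (hypothetical naming)."""
    commands = []
    for i in range(1, variants + 1):
        branch = f"{feature}-plan-{i}"
        # Place each worktree next to the repository, e.g. ../shop-invoices-plan-1
        path = repo.parent / f"{repo.name}-{branch}"
        commands.append(
            ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path)]
        )
    return commands


def create_worktrees(
    repo: Path, feature: str, variants: int = 2, dry_run: bool = True
) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in worktree_commands(repo, feature, variants):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Each agent then works in its own directory and branch, so the two plans never touch each other’s files. Once one plan wins, the other worktree can be removed with `git worktree remove <path>`.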

Execute

I let the agent follow the plan while I grab a coffee and watch it work. Watching the agent read files and make changes is sometimes breathtaking. But the more I work this way, the higher my baseline for normal gets: I’m less surprised and more demanding.

As I said, I shouldn’t be surprised by the outcome. The agent does its job when it follows the plan and delivers working code according to the ADR and the plan. Sometimes it modifies places we never talked about. These may be related through the type system, or the agent may change them to preserve backward compatibility. Even a well-crafted plan may have blind spots, and it’s interesting to see the agent find them anyway. And sometimes it doesn’t. That’s the trick.

Finalisation is on my side. My quick checklist includes:

- reading the code and verifying the results,

- committing the work or letting the agent commit; thanks to the rich context, the agent writes detailed commit messages,

- preparing the pull request, with or without the agent’s help.

This is TAPE

Talk, ADR, Plan, and Execute. This four-step process gives me a fresh perspective and lets me deliver faster. It works well in my current environment, with the things I’m working on. It surely doesn’t fit every case. The intuition I’ve developed is knowing when to use it and when not to.

Is it effective? I don’t know, but for me it’s impressive. I can freely form my thoughts in discussion with the agent, and it captures them properly, structures them and follows them. What matters more is the cost. I experimented with frontier models with thinking capabilities, and some sessions cost at least a few bucks. Maybe I just talked too much.

With agents or without, I think documenting your decisions is good practice, at least the significant ones.

---

¹ ADR sometimes stands for Any Decision Record, which may fit well if you want to store decisions not strictly related to architecture.
